Retrieval Augmented Generation (RAG) system for a private knowledge base

A simple and clean implementation of Retrieval Augmented Generation (RAG) with open-source embedding models and LLaMA

Retrieval-Augmented Generation (RAG) for LLM [1] is a hot topic. It's a system designed to address the problem of LLMs lack of the knowledge of most recent events and facts, and lack of knowledge of private knowledge bases, such as internal documents for some project or product for a company. It's been widely adapted for building real-world applications build with LLM.

We are excited to introduce our most recent open-source project RAG-LLaMA, a simple and clean open-source implementation of Retrieval Augmented Generation (RAG). Using open-source embedding from SentenceTransformers [2] for retrieval, and the LLaMA chat model [3] for generation. With minimal dependencies on third-party tools.

Our toy project builds a RAG-based chatbot for answering questions about Tesla cars, based on the most recent Tesla car user manual documents.

[UPDATE 2024-03-31]: The project now supports fine-tuning the embedding model to solve Tesla car troubleshooting alert codes issue. Which was first discussed in a blog post by Teemu Sormunen Improve RAG performance on custom vocabulary

Background

Large language models (LLMs) like ChatGPT show potential in acquiring general knowledge on a broad list of topics. They can perform various tasks, such as writing essays, summarizing long documents, and writing computer code. However, several major problems affect the mass adaptation of LLMs in real-world applications.

These include:

Limited knowledge: LLMs are limited by the information they were trained on, especially in the pre-training phase. Due to the sheer size of the model, training or re-training the model to keep their knowledge current is both technically challenging and economically expensive. Even if re-training is not a problem, we have to consider the fact that not all data are publicly available. For example, business contracts or product design documents. Without access to this private data during training, LLMs could have no exposure to these private knowledge bases, thus making them less useful.

Hallucinations: While LLMs like ChatGPT or Gemini have shown great capabilities, they suffer from the major problem of hallucination, where they generate factually incorrect content. In fact, recent studies show that the hallucination rate is quite high with both public and open-source LLMs, often reaching as high as 30~40% [4].

RAG addresses these limitations by introducing an information retrieval component. When a user asks a question, RAG-based system will try to search an external knowledge base (like a database or specific documents) for information relevant to the question. The retrieved document or part of the document will be provided to the LLM along with the original question. With the additional context, LLM can generate a more informed, accurate, and relevant response. (This/The addition of context) effectively addresses the limited knowledge problem, and also reduces hallucinations, because we can ask LLM to only look for facts in the provided context.

How RAG Works

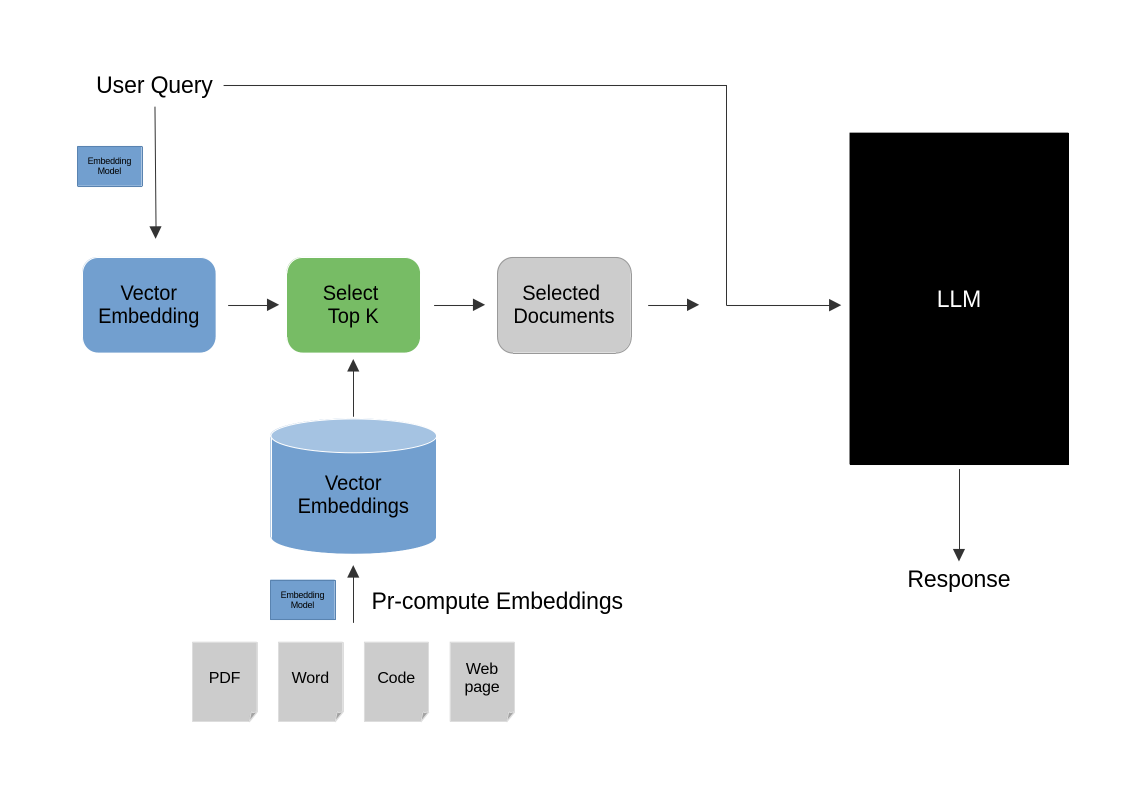

RAG operates through two primary stages: retrieval and generation. Here's a closer look at each:

Retrieval: The first stage of RAG involves a retrieval component, typically a system designed to locate pertinent documents or document segments from a vast knowledge repository in response to a user query.

Generation: Following the retrieval phase, the selected documents are combined with the original user query and then passed to a generation component, often an LLM (such as GPT).

Retrieval Component

Here's an overview of the retrieval component's operation. Upon receiving a user query, the retrieval component initially calculates relevance scores for each document or document segment within the existing knowledge base. Subsequently, it selects the top K most relevant documents based on these scores.

But how do we calculate relevant scores when the input is raw text? One simplistic method is to employ keyword-based search such as BM25 or Term Frequency-Inverse Document Frequency (TF-IDF), which considers factors like term frequency, document length, and term frequency. Keyword-based solution is suffice for simple cases. However, a more advanced and commonly adapted approach involves utilizing an embedding-based method. This method entails employing a specifically pre-trained embedding model to initially generate vectorized representations of the text (both query and documents). Subsequently, it calculates similarity scores by comparing these embedding vectors against the user query, which is also vectorized using the same embedding model. This idea is illustrated in Figure 1

These pre-trained embedding models are capable of encoding semantic information about text, including both queries and documents. This feature enables the retriever to understand not only the literal presence of words but also their contextual meanings. Embedding models exhibit superior generalization to unseen queries and documents due to their training on extensive corpora of text data. In contrast, keyword-based search methods may necessitate manually crafted rules tailored to each specific use case.

When employing embedding-based methods for retrieval, the vector representation of the knowledge base—often referred to as an embedding database—is pre-computed. This pre-computation saves computational resources and time during the actual retrieval process. Additionally, new embeddings can be added into the knowledge base to accommodate document updates or additions.

Commercially available embedding models, like those from OpenAI, exist. However, for this project, we leverage an open-source alternative. This is because commercial models often require sending data to the service provider for embedding computation. This raises governance and compliance concerns for sensitive data, as control is lost once it leaves the organization.

During inference, the user query is embedded on-demand using the same model that computes the knowledge base vector representations. This ensures consistency. We then calculate similarity scores between the query embedding and all embeddings in the knowledge base. Finally, we select the top K entries with the highest similarity scores.

The retrieval component's final output should include the original text of the document embeddings. These texts are merged with the user query and then sent to the generation component (LLM). Raw text is crucial for the LLM's tokenizer to compute an accurate representation, as LLMs cannot directly interpret vectorized embeddings.

Generation Component

The generation component is typically a large language model (LLM) such as ChatGPT or Gemini. Once we retrieve the documents, we need to provide them to the LLM so it can use this additional context to answer questions.

This process often involves constructing specific system prompt instructions to ensure the LLMs can understand the task.

For example, in our toy project, we use a simple prompt that instructs the LLM to answer questions about Tesla cars based on information found in user manuals.

You are an assistant to a Tesla customer support team. Your job is to answer customer's questions to the best of your ability. You will be provided with a set of documents delimited by triple quotes and a question. Avoid documents do not contain the information needed to answer this question. If an answer to the question is provided, it must be annotated with a citation. If multiple documents contain the same information, only use the one best matching this question.

We then need to augment the user prompt, which is done by adding the retrieved documents. Here's a simple example of how to do it. Note we replaced " with ` due to web display technical reasons.

f'```{doc_strs}```\n\nQuestion: {query}'

Finally, we can send both the augmented user prompt and the system prompt to the LLM and wait for its response.

Advanced RAG

The retrieval component plays an important role in RAG-based systems. The overall performance of the system depends on the accuracy and relevance of the retrieved documents. Imagine that LLMs might have a much higher hallucination rate if the retrieval component provides incorrect or irrelevant documents.

We discuss some of the advancement in RAG, like using reranking and Hypothetical Document Expansion (HyDE).

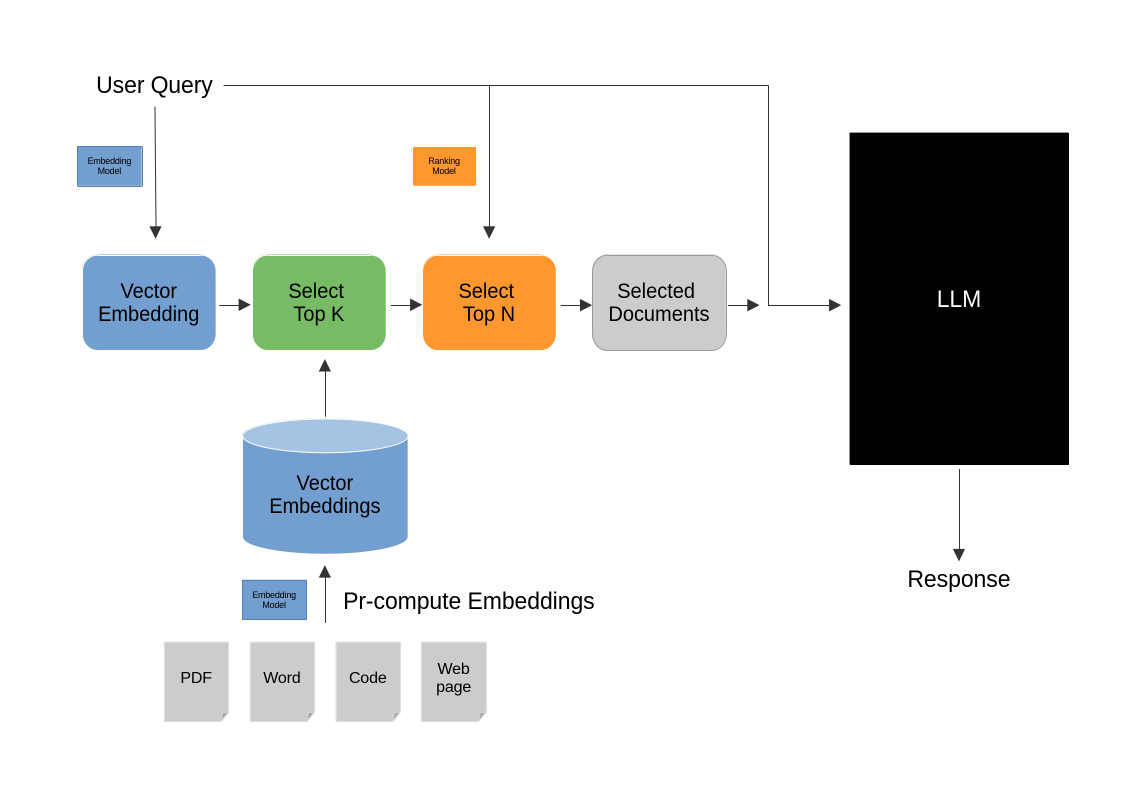

Retrieval with Reranking

One way to further improve the retrieval component is by adding an addtional ranking mechanism [5] into the retrieval system. This mechanism is often used after the system has selected the top K candidate embeddings. It involves using a ranking model to compute ranking scores for each retrieved document againt the user query. Since computeint the ranking scores over the entire knowledge base can be very slow.

In reranking, another model is used to compute ranking scores. This model can be either a specially trained one that outputs a scalar value for each user query-document pair, or a large language model (LLM) like GPT-4. For LLMs, the user query and each document are fed as input to compute the ranking scores.

For instance, when using GPT-4 for ranking scores, we could construct the system prompt like this:

You will be given a single question and a list documents. Your job is compute a ranking scores for each of the document against the question. The document with the highest relevancy to the question should have the highest score, while document with lowest relevancy should have the lowest score. The scores should be in the range of 0 to 10, with 10 being the highest score, and 0 the lowest. Return the scores along with the document text for each document using a dictionary structure.

Then construct the user prompt like this:

Question:\n{query}\n\n####\n\nDocuments:\n[{doc1_str}, {doc2_str}, ...]

When use reranking, we can leverage a much larger top K value, as calculating similarity scores is relatively fast. This allows the system to consider more documents and ultimately select those that best match the user query.

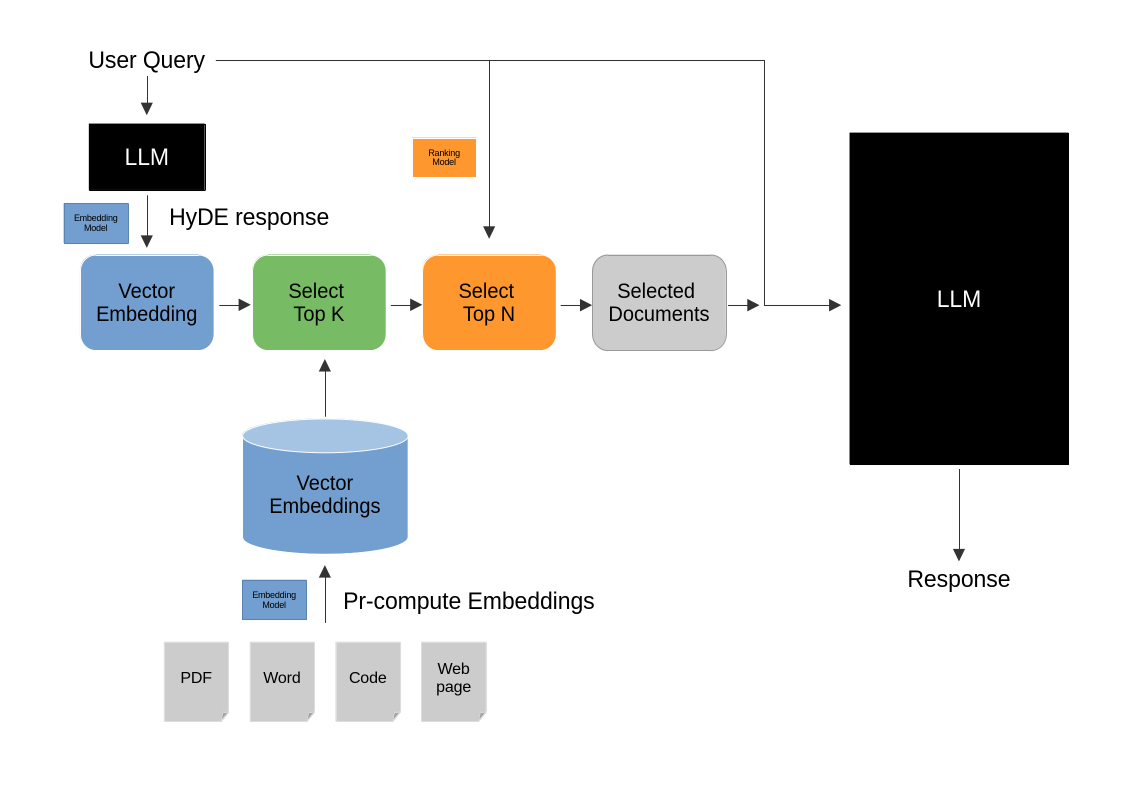

Retrieval with Hypothetical Document Expansion (HyDE)

Another way to improve the retrieval component is by adapting the Hypothetical Document Expansion (HyDE) mechanism [6]. With HyDE, instead of using the user query as input to compute embedding and then select the top K documents based on the similarity scores, we first ask an LLM to generate a "hypothetical" response to the user query. We then use this hypothetical response to compute embeddings and select the top K documents. This could be useful in some special domains, for example when the user query is too general, which means the standard retrieval mechanism not work.

The LLM we use for generating HyDE response could either be the same LLM we use as the generator, or it could be other ones, like GPT-4. Here is an example of how we could ask an LLM to generate such a response:

Please write a short passage to answer the question to the best of your ability. The passage should not exceed 200 words.\nQuestion: {query}\n\nPassage:

Our Toy Project - Tesla customer support assistant chatbot

We built our toy project based on the Tesla car user manual knowledge base, so we can use the LLM to answer questions about Tesla cars. We asked the same question and compare the model's geneartion with different retrieval components.

Simple questions

We start with a question on when to avoid using full self-driving on a Tesla car. We first used the LLM to generate a response with standard retrieval. The model generated a very good response, referencing the documents.

User

Under what circumstances that I should not use full self-driving on my Tesla car?Assistant (standard retrieval)

Based on the provided documents, there are several circumstances under which you should not use Full Self-Driving (Beta) on your Tesla car:

1. When driving in countries outside of the United States and Canada: As Full Self-Driving (Beta) deployment expands, Tesla will make it available to eligible customers in select countries outside of the United States and Canada. However, it is essential to be extra attentive and overly cautious when using Full Self-Driving (Beta) in these countries due to unique infrastructure, driving behaviors, and traffic patterns that the feature must adapt to over time.

2. When driving in limited Beta: Full Self-Driving (Beta) requires a fully attentive driver and displays a series of escalating warnings requiring driver response. You must keep your hands on the steering yoke (or steering wheel) while Full Self-Driving (Beta) is engaged. In addition, the cabin camera monitors driver attentiveness. Therefore, you should not use Full Self-Driving (Beta) in limited Beta if you are not willing or able to pay constant attention to the road and be prepared to act immediately, especially around blind corners, crossing intersections, and in narrow driving situations.

3. When driving in areas with poor road conditions: Full Self-Driving (Beta) relies on visual and radar signals to navigate, so it may not work well in areas with poor road conditions, such as construction zones, potholes, or heavy snow. In these situations, it is essential to take control of the vehicle and drive manually.

4. When driving in areas with low visibility: Full Self-Driving (Beta) may have difficulty detecting pedestrians, cyclists, or other vehicles in low-visibility conditions, such as heavy rain, snow, or fog. In these situations, it is essential to be extra cautious and take control of the vehicle if necessary.

5. When driving in areas with complex intersections: Full Self-Driving (Beta) may have difficulty navigating complex intersections, such as those with multiple lanes, turns, or pedestrian crossings. In these situations, it is essential to be attentive and take control of the vehicle if necessary.

We then asked the same question with reranking retrieval, and here's the output. The model generates a much better response, which covers more situations.

User

Under what circumstances that I should not use full self-driving on my Tesla car?Assistant (retrieval with reranking)

Based on the provided documents, there are several circumstances under which you should not use Full Self-Driving (Beta) on your Tesla car:

1. Low visibility: Full Self-Driving (Beta) relies on visibility to operate effectively, so in low-light conditions such as rain, snow, direct sun, fog, etc., it is recommended to take over and drive manually.

2. Interactions with pedestrians, bicyclists, and other road users: Full Self-Driving (Beta) may not be able to detect and respond to these road users effectively, so it is important to be ready to take over and drive manually in these situations.

3. Unprotected turns with high-speed cross traffic: Full Self-Driving (Beta) may not be able to handle turns with high-speed cross traffic, so it is important to be ready to take over and drive manually in these situations.

4. Multi-lane turns: Full Self-Driving (Beta) may not be able to handle multi-lane turns, so it is important to be ready to take over and drive manually in these situations.

5. Simultaneous lane changes: Full Self-Driving (Beta) may not be able to handle simultaneous lane changes, so it is important to be ready to take over and drive manually in these situations.

6. Narrow roads with oncoming cars or double-parked vehicles: Full Self-Driving (Beta) may not be able to handle narrow roads with oncoming cars or double-parked vehicles, so it is important to be ready to take over and drive manually in these situations.

7. Rare objects such as trailers, ramps, cargo, open doors, etc. protruding from vehicles: Full Self-Driving (Beta) may not be able to detect and respond to these objects effectively, so it is important to be ready to take over and drive manually in these situations.

8. Merges onto high-traffic, high-speed roads: Full Self-Driving (Beta) may not be able to handle merges onto high-traffic, high-speed roads, so it is important to be ready to take over and drive manually in these situations.

9. Debris in the road: Full Self-Driving (Beta) may not ...truncated due to tokens reached maximum length

We then asked another question about how to open a Tesla car door in a low-power situation. Again, we ran the query through the LLM with standard retrieval. The model's response is good, it correctly find the answer of how to open both front and rear doors in low power situation.

User

How to open the door of a Tesla Model S car when the power is very low?Assistant (standard retrieval)

According to the provided documents, there are a few ways to open the doors of a Tesla Model S car when the power is very low:

1. Using the button located at the top of the interior door handle: This is the recommended method to open the doors when Model S has no power. Press the button and push the door open. (Page 29)

2. Pulling up the manual door release located in front of the window switches: This method can be used in the unlikely situation when Model S has no power. Pull up the manual door release and open the front door. (Page 226)

3. Folding back the edge of the carpet below the rear seats to expose the mechanical release cable: In the unlikely situation when Model S has no power, you can use this method to open the rear door. Pull the mechanical release cable toward the center of the vehicle. (Page 226-227)

It is important to note that the interior release switch may be disabled if child-protection locks are turned on or if Model S is moving. In such cases, you will not be able to open the doors using this method.

We then asked the same question with reranking retrieval, and here's the output. The response is not as good as the standard retrieval, as it didn't include the answer for opening front doot in low power situation.

User

How to open the door of a Tesla Model S car when the power is very low?Assistant (retrieval with reranking)

According to the provided documents, there are two methods to open the doors of a Tesla Model S car when the power is very low:

1. Using the button located at the top of the interior door handle: This method can be used to open the doors from the interior of the car, even when the power is very low. To open a door using this method, press the button located at the top of the interior door handle and push the door open. (Citation: Page 29 of the Model S 2021 Owner's Manual)

2. Folding back the edge of the carpet below the rear seats to expose the mechanical release cable: In the unlikely situation when Model S has no power, this method can be used to open the rear door. To open the rear door using this method, fold back the edge of the carpet below the rear seats to expose the mechanical release cable. Pull the mechanical release cable toward the center of the vehicle to open the door. (Citation: Page 226-227 of the Model S 2021 Owner's Manual)

It is important to note that the manual door releases are designed to be used only in situations when Model S has no power. When Model S has power, use the button located at the top of the interior door handle. (Citation: Page 213-214 of the Model S 2012-2020 Owner's Manual)

Hard questions

We start by asking some hard questions about alert codes for Tesla cars, where the model has difficulty understanding and thus generates poor responses. This is because alert codes like "app_w222" and "bms_a069" are not standard English words, so the embedding model does not treat them as single entities. In this case, both standard retrieval and retrieval with reranking select incorrect documents, with reranking performing worse.

For example, the alert code "app_w222" stands for "Cruise control unavailable Reduced front camera visibility", which means "Traffic-Aware Cruise Control and Autosteer are unavailable because one or more of the front cameras in your vehicle is blocked or blinded by external conditions."

This is the response of the standard retrieval; it gets close to identifying the issue as "Traffic-Aware Cruise Control," but it's not quite right. The generated outputs are even worse when using reranking or HyDE-based retrieval.

User

I see code app_w222 on my dashboard what should I do?Assistant (standard retrieval)

Based on the provided documents, if you see code APP_w222 on your dashboard, it means that the Traffic-Aware Cruise Control and Autosteer features are unavailable due to reduced front radar visibility. According to the documents, this could be caused by factors such as snow, ice, dirt, or mud obstructing the radar located in the front bumper area of your vehicle. To address this issue, the documents suggest the following steps:

1. Continue driving your vehicle as usual, as it is safe to do so.

2. Check the front bumper area for any obstructions and attempt to clear them. If there are any dirt or debris, refer to the section on cleaning a camera on page 182 of the owner's manual for more information.

3. If the alert persists throughout your drive, examine the front bumper before your next planned drive and attempt to clear any obstruction.

4. If the alert persists throughout subsequent drives but no obstruction is visible on the front bumper where the radar is located, schedule service at your earliest convenience.

It is important to note that if you experience any difficulty or uncertainty in addressing the issue, you should consult a professional mechanic or contact Tesla's customer service for further assistance.

We then tried a hybrid retrieval approach, where we used a mixture of standard retrieval with a keyword-based method (BM25). This is because the alert code is a keyword, while standard retrieval might struggle to understand it; however, a keyword-based approach should be able to correctly identify it. This hybrid retrieval works great for this specific problem, as the model can now answer the question correctly.

User

I see code app_w222 on my dashboard what should I do?Assistant (hybrid retrieval)

Based on the provided documents, if you see code app_w222 on your dashboard, it means that Traffic-Aware Cruise Control and Autosteer are unavailable due to reduced front camera visibility. According to the documents, this could be caused by external conditions such as dirt, debris, or environmental factors like rain, fog, or bright sunlight. To resolve the issue, you can try the following steps:

1. Inspect and clean the front camera area at the top center of the windshield before your next planned drive.

2. Check the camera surface for condensation, dirt, or other debris and attempt to clear any obstruction.

If the alert persists throughout subsequent drives but no front camera obstruction is visible, you may need to schedule service at your earliest convenience.

It is important to note that if the issue is not resolved, it may be a sign of a more serious problem that requires professional attention.

Please refer to the owner's manual for more information on cleaning the front camera area and troubleshooting alerts related to the electrical system.

Similar results can be observed by asking a question with a different alert code "bms_a069" is for Battery charge level low Charge now, which means Your vehicle has detected that the high voltage battery does not have enough energy remaining to support driving. This alert is usually present because your vehicle's high voltage battery charge level has been reduced through normal operation. Agagin, the LLM using hybrid retrieval can answer the question correctly.

User

What does bms_a069 mean?Assistant (standard retrieval)

According to the provided documents, BMS_a069 is an alert that appears on the vehicle's display when the battery's maximum charge level and range may be reduced. This alert is similar to BMS_u066, which was discussed in the previous question.

The document "MODEL S 2021 + OWNER'S MANUAL" on page 230-231 explains that this alert means that the vehicle has detected a condition internal to the high voltage battery that is limiting the battery's performance. As a result, the maximum charge level and range may be reduced. The alert suggests scheduling service soon to restore full performance.

Therefore, based on the information provided in the documents, BMS_a069 means that the vehicle's high voltage battery is not functioning at its full capacity, and service is required to address the issue.

User

What does bms_a069 mean?Assistant (hybrid retrieval)

As an assistant to the Tesla customer support team, I can provide you with the answer to your question. BMS_a069 is an abbreviation used in the Tesla Model S owner's manual, and it refers to a specific troubleshooting alert.

According to the manual, BMS_a069 means "Battery charge level low - Charge now." This alert is triggered when the vehicle's high voltage battery charge level has been reduced to a certain threshold, indicating that the battery needs to be charged immediately to avoid any issues with the vehicle's performance or safety.

It's important to note that if this alert is present while driving, the vehicle may shut down to prevent any potential safety issues. Therefore, it's essential to charge the vehicle as soon as possible if this alert appears. If the alert persists after charging, it's recommended to schedule service at the earliest convenience to ensure the high voltage battery is functioning properly.

I hope this information helps clarify what BMS_a069 means in the context of the Tesla Model S owner's manual. If you have any further questions, please don't hesitate to ask.

Challenges of implementing RAG based systems

Data Preperation and Extraction

One of the biggest challenges of building a Rule-Action-Graph (RAG) based system is preparing data for the knowledge base. Unlike data stored in relational databases, our source data often comes from unstructured formats like corporate documents (PDFs, Word documents), web pages, and even codebases.

Extracting text from these diverse sources is the most time-consuming and labor-intensive aspect. Different documents and web pages have varying structures and layouts, making a one-size-fits-all automated solution impractical. A solution effective for one document might not work for a different client or project. For instance, we spent nearly two days fine-tuning PDF text extraction tools to properly handle the structure of Tesla user manuals. This involved reading the manuals page-by-page to understand the structure and then writing code to extract the text and address all edge cases. Unfortunately, this tailored approach wouldn't be applicable to projects involving documents with different structures or layouts.

In addition to the challenge of dealing with various data sources and file types, the data preparation and extraction phase also faces another hurdle: how to correctly convert very long text into smaller, manageable chunks. This is necessary because Large Language Models (LLMs) often have limitations on context length, typically ranging from 4,000 to 16,000 tokens. Therefore, we need to limit the length of the input query.

Beyond this context length restriction, there's another reason to segment long documents. Including irrelevant information can decrease the overall performance of LLMs. Finally, processing lengthy user prompts or contexts can slow down response generation due to performance limitations.

We chose Tesla car user manuals for a specific reason: each topic or section typically contains around 200-300 words. This allows us to extract text section-by-section and select the top 3 most relevant documents during retrieval. This approach ensures that most LLMs can easily handle the context length.

Choosing embedding model

The embedding model plays a crucial role in the retrieval component, significantly impacting its overall accuracy. We have various options available, including commercial embedding models, open-source alternatives, or training custom models, each with its own advantages and disadvantages.

Commercial embedding models, such as those offered by OpenAI and other cloud-based providers, offer easy accessibility. Setting up an API key to access these services is quick, and their widespread use across industries ensures reliable performance. However, they come at a cost, often based on token usage. Depending on the size of your knowledge base and the frequency of system usage, expenses can vary. Additionally, reliance on cloud-based solutions raises concerns about data privacy and compliance, especially for sensitive or classified information.

Open-source embedding models, like those provided by SentenceTransformers, have gained traction recently. While setup may be more time-consuming, they offer cost-free usage with no additional charges, subject to model licensing terms. However, running these models may require additional computational resources, and their performance may not match that of commercial counterparts.

For cases where existing models are inadequate, training a custom embedding model tailored to specific needs becomes an option. This approach is suitable when dealing with unique knowledge bases not well-addressed by commercial or open-source solutions. For example the knowledge base might have custom vocabulary which the existing embedding model couldn't understand. However, it involves significant time and labor to prepare the extensive training data required and demands substantial computational resources for model training.

Summary

While RAG-based systems can effectively tackle the issue of limited knowledge in LLM and potentially reduce hallucinations, implementing such a system still presents significant challenges. This is especially true in the data preparation and extraction phase, owing to the diverse data sources and structures involved, which may necessitate labor-intensive work.

New open-source models and tools might help address these issues and greatly reduce the time and effort required to implement RAG-based systems in the future.